Social Relation Recognition in Daily Life Photos - A Domain Based Approach

Authors

Qianru Sun, Mario Fritz and Bernt Schiele

Abstract

Social relations are the foundation of human daily life. Developing techniques to analyze such relations from visual data bears great potential to build machines that better understand us and are capable of interacting with us at a social level. Previous investigations have remained partial due to the overwhelming diversity and complexity of the topic and consequently have only focused on a handful of social relations. In this paper, we argue that the domain-based theory from social psychology is a great starting point to systematically approach this problem. The theory provides coverage of all aspects of social relations and, at the same time, is concrete and predictive about the visual attributes and behaviors that define the relations in each domain. We provide the first dataset built on this holistic conceptualization of social life, with a hierarchical label space of social domains and social relations. We also contribute the first models to recognize such domains and relations, and find that attribute-based features yield superior performance. Beyond the encouraging performance of the attribute-based approach, we also find interpretable features that are in accordance with the predictions of the social psychology literature. More broadly, we believe that our contributions interleave visual recognition and social psychology theory more tightly, with the potential to complement theoretical work in the area with empirical, data-driven models of social life.

5 Social Domains & 16 Social Relations

Figure 1. 5 social domains and 16 social relations

- Attachment domain (d0), characterized by proximity maintenance within a protective relationship. Human attributes such as age difference, proximity and the activity of seeking protection are visually recognizable social cues. Specific relations are father-child, mother-child, grandpa-grandchild and grandma-grandchild (r0-r3).

- Reciprocity domain (d1), characterized by the negotiation of matched benefits with functional equality between people. Key features are matched, mutually beneficial interactions in a long-term accounting process. Typically, the age difference among peers is small, which makes it an important semantic attribute. Also, mutual activities such as "gathering" and "sharing" often appear in this domain. Sequenced exchange of positive effect is another factor, which is hard to predict from a single image but might be useful when employing video. Specific relations are friends, siblings and classmates (r4-r6).

- Mating domain (d2), concerned with the selection of, and protection of access to, a sexual partner, e.g. the relationship between lovers. Gender cues and the behavioral cue of caring for offspring are essential for this domain. Bugental [2] also emphasized the facial attractiveness of prospective partners, suggesting that facial and, most likely, full-body appearance are important cues. The specific relation is lovers/spouses (r7).

- Hierarchical power domain (d3), characterized by using or expressing social dominance. Dominance appears in resource provision and threatening activities. Concrete examples of dominant individuals are leaders, powerful peers and teachers. Conversely, submissive activities such as "listening" and "agreeing" are more adaptive for those who lack dominance. Specific relations are presenter-audience, teacher-student, trainer-trainee and leader-subordinate (r8-r11).

- Coalitional groups domain (d4), concerned with the identification of the lines dividing "us" and "them". The focus is on grouping and conformity cues, in groups ranging from colleagues at work, through sports team members, to band members. Coalitional group members often share similar or identical clothing and perform joint activities. Specific relations are band members, dance team members, sport team members and colleagues (r12-r15).
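Since every relation belongs to exactly one domain, a relation label (r0-r15) determines its domain label (d0-d4). The hierarchy can be sketched in Python as follows, assuming relations are numbered in the order listed above; the identifiers (`DOMAINS`, `RELATIONS`, `relation_to_domain`) are illustrative and not taken from the released code.

```python
# Hierarchical label space: 5 social domains (d0-d4) partitioning
# 16 social relations (r0-r15). Names and spellings are illustrative.
DOMAINS = {
    0: ("attachment", ["father-child", "mother-child",
                       "grandpa-grandchild", "grandma-grandchild"]),
    1: ("reciprocity", ["friends", "siblings", "classmates"]),
    2: ("mating", ["lovers/spouses"]),
    3: ("hierarchical power", ["presenter-audience", "teacher-student",
                               "trainer-trainee", "leader-subordinate"]),
    4: ("coalitional groups", ["band members", "dance team members",
                               "sport team members", "colleagues"]),
}

# Flatten into a list indexed by relation number: (relation name, domain index).
# Relations are numbered r0..r15 in the order they appear within d0..d4.
RELATIONS = [(rel, d_idx)
             for d_idx in sorted(DOMAINS)
             for rel in DOMAINS[d_idx][1]]


def relation_to_domain(r_idx: int) -> int:
    """Return the domain index d for relation index r."""
    return RELATIONS[r_idx][1]
```

For example, `relation_to_domain(7)` maps lovers/spouses to the mating domain (d2), so a classifier that predicts relations also yields a domain prediction for free.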

PIPA-relation Dataset

Single body/face data, annotation labels and train-test-eval splits can be downloaded here: data, labels, splits.

Image resource. The image resource People in Photo Album (PIPA) [3] was collected from Flickr photo albums for the task of person recognition. Photos from Flickr cover a wide range of social situations and are thus a good starting point for studying social relations. The same person often appears in different social scenarios and interacts with different people, which makes the dataset ideal for our purpose. Identity information is used for selecting person pairs and defining train-validation-test splits. In summary, PIPA contains 37,107 photos with 63,188 instances of 2,356 identities. We extend the dataset with 26,915 person pair annotations for social relations.

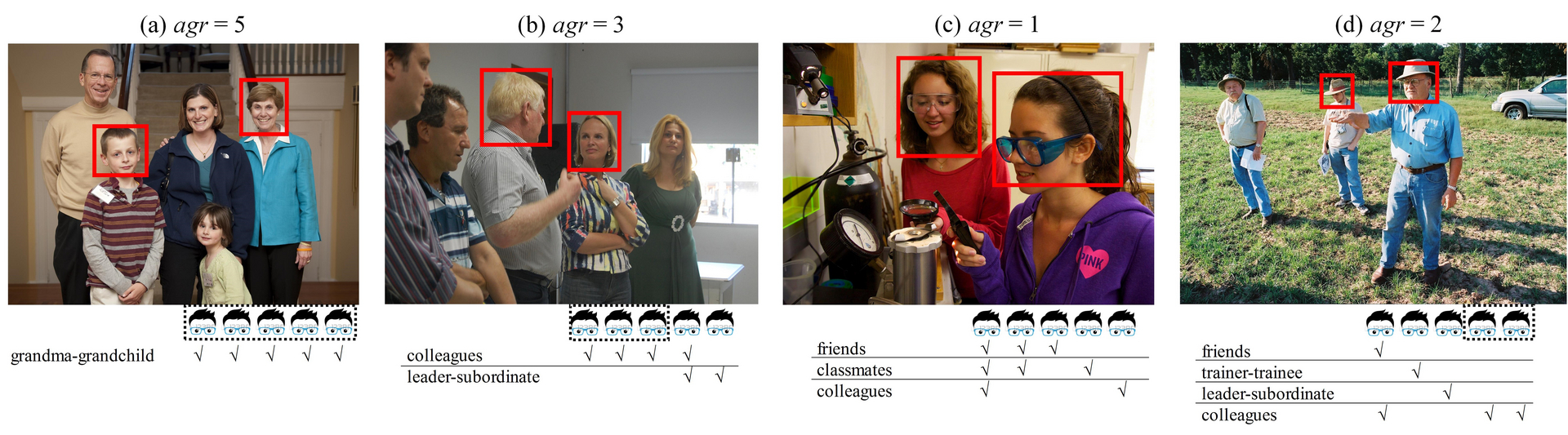

Annotators. Annotating social relations can be subjective and ambiguous. One reason is that a person pair may have multiple plausible relations, as shown in Figure 2. Another reason is that the definition of the same social relation might differ depending on the cultural backgrounds of the annotators. We selected 5 annotators from Asia, Africa, Europe and America and gave them detailed explanations and photo examples to help them maintain a basic level of consistency.

Annotation protocol

- For each annotated person, the head bounding box and identity number are available.

- The label space is hierarchical: each pair is assigned a social domain label, which partitions the data into 5 domain classes, as well as a label for the particular relation that the two persons appear to be in.

- Annotators are asked to annotate all person pairs independently; each pair is presented as a pair of head bounding boxes.

- For each pair, the annotator can either pick a relation from our list or, if too uncertain, skip the pair. For example, two people wearing uniforms and working in a factory should be labeled as "colleagues", as the cues of action ("working"), clothing ("uniforms") and environment ("factory") are obvious. If annotators are uncertain about a relation they pick, they are asked to indicate this by clicking "maybe" for that relation.

- Based on our pre-annotation phase, we allowed at most 3 relation labels per person pair.
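The protocol above can be captured as a small validation routine. This is a sketch under stated assumptions: the record layout and field names (`PairAnnotation`, `photo_id`, `maybe`, `skipped`) are hypothetical, and domain labels are derived from relation labels using the r0-r15 ordering of the domain list.

```python
from dataclasses import dataclass, field
from typing import List

MAX_LABELS_PER_PAIR = 3  # at most 3 relation labels per person pair
# Domain index of each relation r0..r15 (d0: r0-r3, d1: r4-r6, d2: r7,
# d3: r8-r11, d4: r12-r15), following the domain list above.
DOMAIN_OF_RELATION = [0] * 4 + [1] * 3 + [2] + [3] * 4 + [4] * 4


@dataclass
class PairAnnotation:
    """Hypothetical record for one annotator's labels on one person pair."""
    photo_id: str
    person_a: int  # identity number attached to the first head box
    person_b: int  # identity number attached to the second head box
    relations: List[int] = field(default_factory=list)  # relation indices
    maybe: List[bool] = field(default_factory=list)     # one flag per label
    skipped: bool = False  # annotator was too uncertain to label the pair

    def validate(self) -> None:
        """Raise ValueError if the record violates the annotation protocol."""
        if self.skipped:
            if self.relations:
                raise ValueError("a skipped pair must not carry relation labels")
            return
        if not 1 <= len(self.relations) <= MAX_LABELS_PER_PAIR:
            raise ValueError("a labeled pair needs 1 to 3 relation labels")
        if len(self.maybe) != len(self.relations):
            raise ValueError("expected one 'maybe' flag per relation label")
        if len(set(self.relations)) != len(self.relations):
            raise ValueError("duplicate relation label")
        for r in self.relations:
            if not 0 <= r < len(DOMAIN_OF_RELATION):
                raise ValueError(f"relation index {r} out of range")

    def domains(self) -> List[int]:
        """Domain labels implied by the relation labels (sorted, unique)."""
        return sorted({DOMAIN_OF_RELATION[r] for r in self.relations})
```

Because the domain labels are computed rather than stored, the two levels of the hierarchy cannot disagree within a record.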

Semantic Attribute Models

Figure 4. A double-stream CaffeNet, which learns an end-to-end mapping from an image pair (a body pair or face pair) to either 5 domain classes or 16 relation classes.

End-to-end models (baseline). Social relations or domains can be recognized in the traditional way with an end-to-end double-stream network, in which the two person images are fed into two separate streams, as shown in Figure 4.
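To make the data flow concrete, here is a toy, pure-Python sketch of a double-stream classifier. It is not CaffeNet: each stream is reduced to a single fully connected layer with ReLU over a flattened input vector, the two streams are given separate weights, and their outputs are fused by concatenation before a final classification layer with softmax. Separate stream weights and fusion by concatenation are assumptions for illustration, and training is not shown.

```python
import math
import random
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights, biases)


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Small random weights, zero biases."""
    weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def linear(x: List[float], layer: Layer) -> List[float]:
    """Fully connected layer: one output per weight row."""
    weights, biases = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(x: List[float]) -> List[float]:
    return [max(0.0, v) for v in x]


def softmax(z: List[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


class DoubleStream:
    """Toy double-stream classifier: one stream per person, late fusion."""

    def __init__(self, n_in: int, n_hidden: int, n_classes: int, seed: int = 0):
        rng = random.Random(seed)
        self.stream_a = init_layer(n_in, n_hidden, rng)  # first person's stream
        self.stream_b = init_layer(n_in, n_hidden, rng)  # second person's stream
        self.head = init_layer(2 * n_hidden, n_classes, rng)  # fused classifier

    def forward(self, person_a: List[float], person_b: List[float]) -> List[float]:
        h_a = relu(linear(person_a, self.stream_a))
        h_b = relu(linear(person_b, self.stream_b))
        return softmax(linear(h_a + h_b, self.head))  # h_a + h_b concatenates lists
```

With `n_classes=5` the output is a distribution over the social domains; with `n_classes=16` it is a distribution over the relations.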

Fine-tuned CaffeNet and VGG models can be found here.

Semantic Attribute models. Inspired by the social-psychological definitions of social domains, we propose an intermediate semantic attribute representation and use it for recognition. We investigate the semantic head and body attribute categories mentioned in the definitions of social domains [2]. The 12 attribute categories and their data sources are described below. For each attribute category, we either leverage an existing attribute dataset to train a double-stream CaffeNet or use models pre-trained in previous work, as for immediacy and activity.

- Age: infant, child, young, middleAge, senior, unknown. Additionally, we add smallAgeDiff, middleAgeDiff and largeAgeDiff, because age difference is important for distinguishing social relations. We use separate head-age and body-age models, trained on the head and body images of PIPA, respectively.

- Gender: male, female. We add sameGender and diffGender. As with age, we use separate head-gender and body-gender models.

- Location & Scale: composed of the 4-dim location coordinates (x, y, width, height), relativeDistance (far, close) and relativeSizeRatio (large, small), directly collected from the head/body locations in PIPA.

- Head appearance: 40 attributes in total, such as straight hair, wavy hair, wearing earrings and wearing a hat. The model is trained on the CelebFaces Attributes dataset (CelebA).

- Head pose & face emotion: poses are frontal, left, right, up and down. Emotions are anger, happiness, sadness, surprise, fear, disgust and neutral. Both models are trained on the Indian Movie Face Database (IMFDB).

- Clothing: longHair, glasses, hat, tShirt, shorts, jeans, longPants and longSleeves. The model is trained on the Berkeley People Attribute dataset.

- Immediacy & activity: directly use the published models of immediacy and activity.
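The page does not state how the pair-level attributes (age difference, gender pair, relativeDistance, relativeSizeRatio) are obtained. One simple possibility is to compute them from per-person predictions and boxes, as in the sketch below; the distance and size thresholds and the ordinal binning of age classes are hypothetical choices, not values from the paper.

```python
import math
from typing import NamedTuple, Optional


class Box(NamedTuple):
    """Head or body box as (x, y, width, height), (x, y) = top-left corner."""
    x: float
    y: float
    width: float
    height: float


# Hypothetical thresholds, chosen only for illustration.
CLOSE_IN_MEAN_WIDTHS = 2.0   # centers within 2 mean box widths -> "close"
LARGE_AREA_RATIO = 1.5       # area ratio of at least 1.5 -> "large"
AGE_ORDER = ["infant", "child", "young", "middleAge", "senior"]


def relative_distance(a: Box, b: Box) -> str:
    """'close' or 'far', from the center distance scaled by mean box width."""
    dx = (a.x + a.width / 2) - (b.x + b.width / 2)
    dy = (a.y + a.height / 2) - (b.y + b.height / 2)
    mean_width = (a.width + b.width) / 2
    return "close" if math.hypot(dx, dy) <= CLOSE_IN_MEAN_WIDTHS * mean_width else "far"


def relative_size_ratio(a: Box, b: Box) -> str:
    """'large' or 'small', from the ratio of the larger to the smaller box area."""
    area_a, area_b = a.width * a.height, b.width * b.height
    if min(area_a, area_b) <= 0:
        raise ValueError("boxes must have positive width and height")
    return "large" if max(area_a, area_b) / min(area_a, area_b) >= LARGE_AREA_RATIO else "small"


def gender_pair(gender_a: str, gender_b: str) -> str:
    return "sameGender" if gender_a == gender_b else "diffGender"


def age_difference(age_a: str, age_b: str) -> Optional[str]:
    """Bin the gap between ordinal age classes; None if either age is unknown."""
    if "unknown" in (age_a, age_b):
        return None
    gap = abs(AGE_ORDER.index(age_a) - AGE_ORDER.index(age_b))
    if gap <= 1:
        return "smallAgeDiff"
    return "middleAgeDiff" if gap == 2 else "largeAgeDiff"
```

Under this binning, a middleAge-child pair (as in father-child) falls into middleAgeDiff and a senior-child pair (as in grandparent-grandchild) into largeAgeDiff.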

All fine-tuned models using different attribute datasets can be found here.
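The outputs of these attribute models form the intermediate representation used for recognition. A minimal sketch of assembling it, assuming each of the 12 categories yields a fixed-length feature vector for a person pair and that the vectors are simply concatenated in a fixed order. The category keys and the `extractors` interface are hypothetical, and the split into 12 categories follows our reading of the list above (head/body age, head/body gender, location, scale, head appearance, head pose, face emotion, clothing, immediacy, activity).

```python
from typing import Callable, Dict, List, Sequence

# Our reading of the 12 attribute categories; the keys are illustrative.
ATTRIBUTE_CATEGORIES = [
    "head_age", "body_age", "head_gender", "body_gender",
    "location", "scale", "head_appearance", "head_pose",
    "face_emotion", "clothing", "immediacy", "activity",
]

# An extractor maps the two person crops of a pair to one feature vector.
Extractor = Callable[[object, object], Sequence[float]]


def attribute_representation(person_a: object, person_b: object,
                             extractors: Dict[str, Extractor]) -> List[float]:
    """Concatenate per-category attribute features in a fixed order."""
    missing = [c for c in ATTRIBUTE_CATEGORIES if c not in extractors]
    if missing:
        raise KeyError(f"no extractor for categories: {missing}")
    feature: List[float] = []
    for category in ATTRIBUTE_CATEGORIES:  # fixed order keeps dimensions aligned
        feature.extend(float(v) for v in extractors[category](person_a, person_b))
    return feature
```

The resulting vector can then be passed to any classifier over the 5 domains or 16 relations; which classifier sits on top is left open in this sketch.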

An implementation in TensorFlow can be found here.

References

[1] Sun, Q., Schiele, B. and Fritz, M.: A Domain Based Approach to Social Relation Recognition. CVPR 2017. [Paper]

[2] Bugental, D.B.: Acquisition of the Algorithms of Social Life: A Domain-Based Approach. Psychological Bulletin, Vol. 126, No. 2, pp. 187-219, 2000.

[3] Zhang, N., Paluri, M., Taigman, Y., Fergus, R. and Bourdev, L.: Beyond frontal faces: Improving person recognition using multiple cues. CVPR 2015, pp. 4804-4813.