Lucid Data Dreaming for Object Tracking

Anna Khoreva, Rodrigo Benenson, Eddy Ilg, Thomas Brox and Bernt Schiele

Abstract

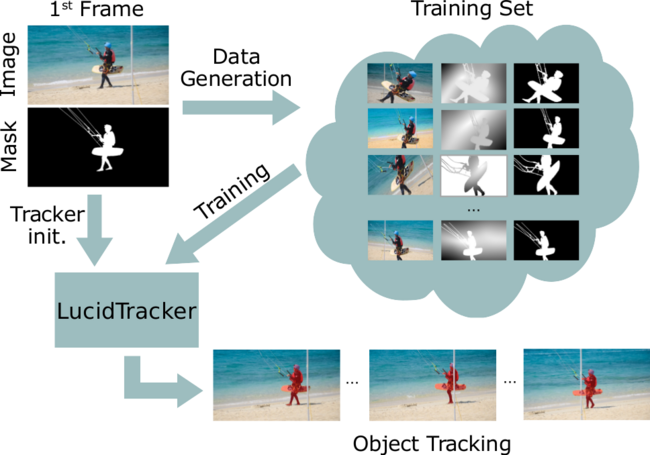

Convolutional networks reach top quality in pixel-level object tracking but require a large amount of training data (1k to 10k) to deliver such results. We propose a new training strategy that achieves state-of-the-art results across three evaluation datasets while using 20× to 100× less annotated data than competing methods. Instead of using large training sets in the hope of generalizing across domains, we generate in-domain training data: the annotation provided on the first frame of each video is used to synthesize (“lucid dream”) plausible future video frames. This in-domain, per-video training data allows us to train high-quality appearance- and motion-based models, as well as to tune the post-processing stage. The approach reaches competitive results even when training from only a single annotated frame, without ImageNet pre-training. Our results indicate that a larger training set is not automatically better: for the tracking task, a smaller training set that is closer to the target domain is more effective. This changes the mindset regarding how many training samples and how much general “objectness” knowledge the object tracking task requires.
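The idea of generating in-domain training data from the first annotated frame can be illustrated with a minimal NumPy sketch. It is a simplified stand-in, not the authors' released augmentation code: each synthetic sample applies one random global rotation, scaling and translation to the image and its mask (nearest-neighbour inverse warping), plus a random brightness gain and bias. The helper names (`warp_nn`, `dream_sample`, `build_training_set`) and all parameter ranges are hypothetical choices made for illustration.

```python
import numpy as np


def warp_nn(arr, M, t, fill):
    """Inverse-warp arr with nearest-neighbour sampling.

    For each output pixel p, the source location is M @ (p - c - t) + c,
    where c is the image centre; pixels mapping outside arr get `fill`.
    """
    h, w = arr.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    dx, dy = xs - cx - t[0], ys - cy - t[1]
    sx = np.rint(M[0, 0] * dx + M[0, 1] * dy + cx).astype(int)
    sy = np.rint(M[1, 0] * dx + M[1, 1] * dy + cy).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.full_like(arr, fill)
    out[valid] = arr[sy[valid], sx[valid]]
    return out


def dream_sample(image, mask, rng):
    """Produce one synthetic (image, mask) sample from the annotated frame."""
    h, w = mask.shape
    angle = rng.uniform(-0.1, 0.1)  # radians (hypothetical range)
    scale = rng.uniform(0.9, 1.1)   # hypothetical range
    c, s = np.cos(angle), np.sin(angle)
    # Inverse map for a random rotation and scaling about the image centre.
    M = np.array([[c, -s], [s, c]]) / scale
    # Random translation of up to 10% of the width/height (hypothetical range).
    t = rng.uniform(-0.1, 0.1, size=2) * np.array([w, h])
    # The same geometric transform is applied to image and mask so they stay aligned.
    img = warp_nn(image.astype(np.float64), M, t, fill=0.0)
    msk = warp_nn(mask, M, t, fill=0)
    # Illumination change: random gain and bias, clipped back to the 8-bit range.
    gain, bias = rng.uniform(0.8, 1.2), rng.uniform(-20.0, 20.0)
    img = np.clip(img * gain + bias, 0, 255).astype(np.uint8)
    return img, msk


def build_training_set(image, mask, n, seed=0):
    """Synthesize n (image, mask) training samples from a single annotated frame."""
    rng = np.random.default_rng(seed)
    return [dream_sample(image, mask, rng) for _ in range(n)]
```

Calling `build_training_set(first_frame, first_mask, n=1000)` would yield 1,000 aligned (image, mask) pairs drawn from one annotated frame. A fuller pipeline would also move foreground and background separately and inpaint the revealed background, which a single global warp cannot do.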

We took 2nd place in the DAVIS Challenge on Video Object Segmentation 2017!

A Meta-analysis of DAVIS-2017 Video Object Segmentation Challenge by Eddie Smolyansky.

Single Object Tracking

Network architecture

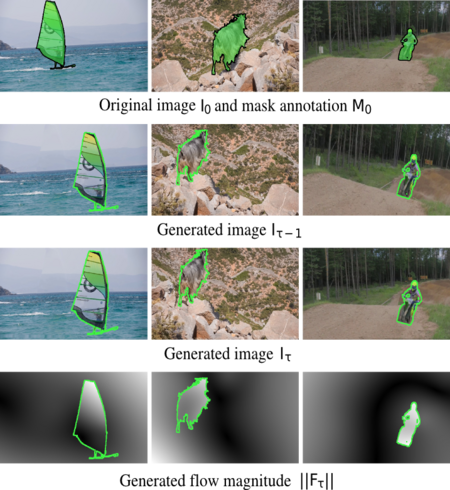

Lucid data dreaming examples

From one annotated frame we generate pairs of images that are plausible future video frames, with known optical flow and masks (green boundaries). Note the inpainted background and foreground/background deformations.
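Why the optical flow and masks of a synthesized pair are "known" can be shown with a small NumPy sketch: the object and the background are moved with two different integer translations, so the ground-truth flow and the second-frame mask follow directly from the chosen motion. This is a deliberately simplified assumption, not the authors' method: the hole left by the object is filled with the mean background colour as a crude placeholder for real inpainting, and plain translations replace the richer foreground/background deformations shown in the examples. The function names `shift` and `dream_pair` are hypothetical.

```python
import numpy as np


def shift(arr, dx, dy, fill=None):
    """Translate arr by integers (dx, dy): out[y, x] = arr[y - dy, x - dx].

    Source pixels outside arr are clamped to the border if fill is None,
    otherwise they are set to `fill`.
    """
    h, w = arr.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    sy, sx = ys - dy, xs - dx
    if fill is None:
        return arr[np.clip(sy, 0, h - 1), np.clip(sx, 0, w - 1)]
    valid = (sy >= 0) & (sy < h) & (sx >= 0) & (sx < w)
    out = np.full_like(arr, fill)
    out[valid] = arr[sy[valid], sx[valid]]
    return out


def dream_pair(image, mask, fg_shift, bg_shift):
    """Synthesize (frame1, frame2, mask1, mask2, flow) from one annotated frame.

    flow[y, x] = (dx, dy) is the forward flow of frame-1 pixel (y, x).
    """
    mask = mask.astype(bool)
    fx, fy = fg_shift
    bx, by = bg_shift
    # Crude stand-in for inpainting: fill the object region with the mean
    # background colour so that moving the background does not drag the object along.
    background = image.copy()
    background[mask] = image[~mask].mean(axis=0).astype(image.dtype)
    bg2 = shift(background, bx, by)                # border-clamped background
    fg2 = shift(image, fx, fy, fill=0)             # moved object pixels
    mask2 = shift(mask, fx, fy, fill=False)        # ground-truth mask of frame 2
    frame2 = np.where(mask2[..., None], fg2, bg2)  # paste object over background
    # The chosen motion gives exact ground-truth flow for every frame-1 pixel.
    flow = np.empty(mask.shape + (2,), dtype=np.float32)
    flow[mask] = (fx, fy)
    flow[~mask] = (bx, by)
    return image, frame2, mask, mask2, flow
```

Because every pixel's displacement is chosen rather than estimated, such pairs can supervise a motion-based model (flow and mask of the second frame) without any manual annotation beyond the first-frame mask.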

Qualitative results

Frames are sampled along the video duration (e.g. 50% marks the middle of the video). Our model is robust to various challenges, such as view changes, fast motion, shape deformations, and out-of-view scenarios.

Video results

Multiple Object Tracking

Network architecture

Lucid data dreams

Video results

Downloads

Pre-computed masks

DAVIS 2016: [trainval set]

DAVIS 2017: [test set] [challenge set]

Source code

Lucid data dreaming augmentation: [github]

For further information or data, please contact Anna Khoreva <khoreva at mpi-inf.mpg.de>.

References

[Khoreva et al., 2017] Lucid Data Dreaming for Multiple Object Tracking, A. Khoreva, R. Benenson, E. Ilg, T. Brox and B. Schiele, arXiv preprint arXiv:1703.09554, 2017.

@inproceedings{LucidDataDreaming_arXiv17, title={Lucid Data Dreaming for Multiple Object Tracking}, author={A. Khoreva and R. Benenson and E. Ilg and T. Brox and B. Schiele}, booktitle={arXiv preprint arXiv:1703.09554}, year={2017}}

[Khoreva et al., CVPR Workshops 2017] Lucid Data Dreaming for Object Tracking, A. Khoreva, R. Benenson, E. Ilg, T. Brox and B. Schiele. The 2017 DAVIS Challenge on Video Object Segmentation - CVPR Workshops, 2017.

@inproceedings{LucidDataDreaming_CVPR17_workshops, title={Lucid Data Dreaming for Object Tracking}, author={A. Khoreva and R. Benenson and E. Ilg and T. Brox and B. Schiele}, booktitle={The 2017 DAVIS Challenge on Video Object Segmentation - CVPR Workshops}, year={2017}}