Feature Generating Networks for Zero-Shot Learning

Yongqin Xian, Tobias Lorenz, Bernt Schiele, and Zeynep Akata

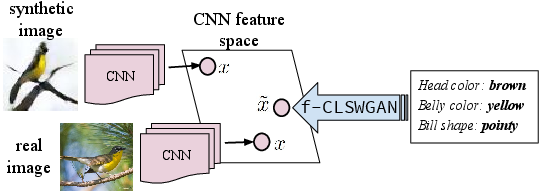

Because of the extreme training-data imbalance between seen and unseen classes, most existing state-of-the-art approaches fail to achieve satisfactory results on the challenging generalized zero-shot learning task. To circumvent the need for labeled examples of unseen classes, we propose a novel generative adversarial network (GAN) that synthesizes CNN features conditioned on class-level semantic information, offering a shortcut directly from a semantic descriptor of a class to a class-conditional feature distribution. Our proposed approach, which pairs a Wasserstein GAN with a classification loss, generates CNN features that are discriminative enough to train softmax classifiers or any multimodal embedding method. Our experimental results demonstrate a significant boost in accuracy over the state of the art on five challenging datasets (CUB, FLO, SUN, AWA and ImageNet) in both the zero-shot learning and generalized zero-shot learning settings.
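To make the data flow in the abstract concrete, the following is a minimal NumPy sketch, not the released code. It assumes a conditional generator that takes noise concatenated with a class embedding and outputs a feature vector. The synthesized features are then used to fit a softmax classifier for the unseen classes. The layer sizes, the leaky ReLU and ReLU activations, and the helper names (`init_generator`, `generate`, `synthesize_dataset`, `train_softmax`) are all hypothetical. The generator weights here are random stand-ins; in the actual method they would come from adversarial training with the Wasserstein and classification losses, which this sketch does not implement.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
NOISE_DIM, ATTR_DIM, HIDDEN_DIM, FEAT_DIM = 16, 8, 32, 64


def init_generator():
    """Random weights standing in for a trained conditional generator."""
    return {
        "W1": rng.normal(0, 0.1, (NOISE_DIM + ATTR_DIM, HIDDEN_DIM)),
        "b1": np.zeros(HIDDEN_DIM),
        "W2": rng.normal(0, 0.1, (HIDDEN_DIM, FEAT_DIM)),
        "b2": np.zeros(FEAT_DIM),
    }


def generate(params, z, attrs):
    """Map noise z and class embeddings attrs to non-negative features."""
    h = np.concatenate([z, attrs], axis=1) @ params["W1"] + params["b1"]
    h = np.where(h > 0, h, 0.2 * h)  # leaky ReLU (assumed activation)
    out = h @ params["W2"] + params["b2"]
    return np.maximum(out, 0.0)  # ReLU output (assumed), so features are >= 0


def synthesize_dataset(params, class_attrs, n_per_class):
    """Sample n_per_class synthetic features for every class embedding row."""
    n_classes = class_attrs.shape[0]
    labels = np.repeat(np.arange(n_classes), n_per_class)
    z = rng.normal(size=(labels.size, NOISE_DIM))
    feats = generate(params, z, class_attrs[labels])
    return feats, labels


def train_softmax(feats, labels, n_classes, lr=0.1, epochs=200):
    """Full-batch gradient descent on the multinomial logistic loss."""
    W = np.zeros((feats.shape[1], n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[labels]
    for _ in range(epochs):
        logits = feats @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / feats.shape[0]
        W -= lr * feats.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b


# Three unseen classes described only by (random, illustrative) embeddings.
unseen_attrs = rng.normal(size=(3, ATTR_DIM))
gen = init_generator()
X_syn, y_syn = synthesize_dataset(gen, unseen_attrs, n_per_class=50)
W, b = train_softmax(X_syn, y_syn, n_classes=3)
```

The point of the sketch is the interface: once features can be sampled per class from its semantic descriptor alone, unseen classes can be handled by an ordinary supervised classifier, with no labeled images required for them.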

Paper, Supp, Code and Data

- If you find this work useful, please cite:

@inproceedings{xianCVPR18, title = {Feature Generating Networks for Zero-Shot Learning}, author = {Yongqin Xian and Tobias Lorenz and Bernt Schiele and Zeynep Akata}, booktitle = {IEEE Computer Vision and Pattern Recognition (CVPR)}, year = {2018}}