People-Tracking-by-Detection and People-Detection-by-Tracking

M. Andriluka, S. Roth, B. Schiele. People-Tracking-by-Detection and People-Detection-by-Tracking.

IEEE Conf. on Computer Vision and Pattern Recognition (CVPR'08), Anchorage, USA, June 2008.

Abstract:

Both detecting and tracking people are challenging problems, especially in complex real-world scenes that commonly involve multiple people, complicated occlusions, and cluttered or even moving backgrounds. People detectors have been shown to be able to locate pedestrians even in complex street scenes, but false positives have remained frequent. The identification of particular individuals has remained challenging as well. Tracking methods are able to find a particular individual in image sequences, but are severely challenged by real-world scenarios such as crowded street scenes. In this paper, we combine the advantages of both detection and tracking in a single framework. The approximate articulation of each person is detected in every frame based on local features that model the appearance of individual body parts. Prior knowledge on possible articulations and temporal coherency within a walking cycle are modeled using a hierarchical Gaussian process latent variable model (hGPLVM). We show how the combination of these results improves hypotheses for position and articulation of each person in several subsequent frames. We present experimental results that demonstrate how this allows us to detect and track multiple people in cluttered scenes with reoccurring occlusions.
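To make the general idea of tracking-by-detection concrete, the following is a minimal sketch that links per-frame bounding-box detections into tracks by greedy overlap (intersection over union). It is not the method of the paper, which relies on part-based articulation detection and an hGPLVM prior over the walking cycle. The function names `iou` and `link_detections`, the box layout, and the `min_iou` threshold are all assumptions made for illustration only.

```python
from typing import Dict, List, Tuple

# Hypothetical box layout: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def link_detections(
    frames: List[List[Box]], min_iou: float = 0.3
) -> Dict[int, List[Tuple[int, Box]]]:
    """Greedily link detections in consecutive frames into tracks.

    Returns a mapping track_id -> list of (frame_index, box).
    A track that finds no match in a frame is terminated, so this
    sketch does not handle occlusions or missed detections.
    """
    tracks: Dict[int, List[Tuple[int, Box]]] = {}
    active: Dict[int, Box] = {}  # track_id -> box in the previous frame
    next_id = 0
    for t, boxes in enumerate(frames):
        # Score every (active track, detection) pair, best overlap first.
        pairs = sorted(
            ((iou(last, box), tid, j)
             for tid, last in active.items()
             for j, box in enumerate(boxes)),
            reverse=True,
        )
        used_tracks, used_boxes = set(), set()
        new_active: Dict[int, Box] = {}
        for score, tid, j in pairs:
            if score < min_iou:
                break  # remaining pairs overlap even less
            if tid in used_tracks or j in used_boxes:
                continue
            used_tracks.add(tid)
            used_boxes.add(j)
            tracks[tid].append((t, boxes[j]))
            new_active[tid] = boxes[j]
        # Every unmatched detection starts a new track.
        for j, box in enumerate(boxes):
            if j not in used_boxes:
                tracks[next_id] = [(t, box)]
                new_active[next_id] = box
                next_id += 1
        active = new_active
    return tracks
```

A purely overlap-based association like this breaks a track as soon as a person is occluded or missed by the detector, which is the kind of failure that motivates combining detections with a model of articulation and temporal coherency over several frames.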

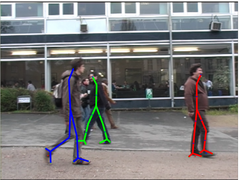

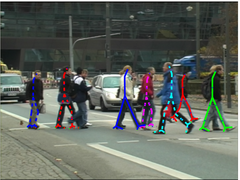

The videos show detection and tracking of people on the "TUD Campus" sequence (left) and the "TUD Crossing" sequence (right). The track of each person is visualized using a unique combination of color and line type. The videos were encoded with the msmpeg4v2 codec (from ffmpeg).

Training Data:

These are the two datasets used to train our people detector. For each training image, annotations of body-part positions as well as detailed segmentations of people are provided.

Test Data:

The test dataset contains 250 images with 311 fully visible people, with significant variation in clothing and articulation. This dataset was used to evaluate the performance of the single-frame detector.

Sequences:

Two sequences were used to evaluate detection and tracking. Annotations are provided for all pedestrians occluded by less than 50%.

Contact:

For further information, please contact the authors: Micha Andriluka, Stefan Roth, and Bernt Schiele.