Activity Spotting & Composite Activities

Human activity recognition using wearable sensors has recently been an active research area due to its importance for context-aware systems. Substantial progress has been made in applications spanning different domains, such as entertainment, industry and healthcare. Some devices are already commercially available, e.g., watches that log the wearer’s motion over weeks to infer fitness levels, or the Nintendo Wii gesture controllers. While the recognition of low-level activities such as walking, standing or sitting has yielded impressive results, the recognition of more complex or composed activities such as cooking or cleaning has received far less attention and remains an open research question. Probably the single most important difficulty in recognizing complex activities is the inherent variability in how they are executed by different people and over different durations. As most of today’s activity recognition methods rely on machine learning techniques, this variability implies that prohibitive amounts of training data are required for each novel application.

We start our investigation with activity spotting, i.e., detecting the activities that occur within a continuous data stream. The spotted activities are then combined to infer composed activities. The interdependency between spotted activities and composed activities can in turn be exploited to improve recognition. The main topics investigated in the thesis are briefly outlined below:

Spotting Activities [1]

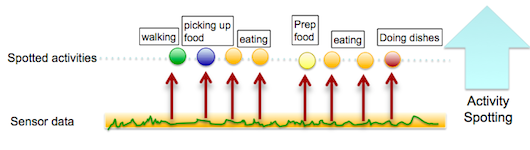

In general, human activity data contains a large "background" class in which the activities we are actually interested in are buried. To spot these activities, we apply machine learning techniques that assign activity labels with corresponding confidences to potentially interesting parts of the data. Questions investigated include, for instance, the type of features used and the type and number of sensors.
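The following is a minimal sketch of spotting against a background class. It assumes a toy 1-D signal, a hypothetical mean/standard-deviation feature vector and a nearest-prototype classifier as a stand-in; none of these are the features, sensors or classifiers evaluated in [1]. Each window receives a label and a confidence, and windows whose confidence falls below a threshold are left to the background class.

```python
import math
import statistics

def sliding_windows(signal, width, step):
    """Yield (start_index, window) pairs from a 1-D signal."""
    for start in range(0, len(signal) - width + 1, step):
        yield start, signal[start:start + width]

def window_features(window):
    """Illustrative two-dimensional feature vector: mean and standard deviation."""
    return (statistics.fmean(window), statistics.pstdev(window))

def spot_activities(signal, prototypes, width=4, step=4, threshold=0.5):
    """Return (start_index, label, confidence) for every window that matches an activity.

    Each window is assigned to its nearest prototype; the confidence is
    exp(-distance), so it lies in (0, 1]. Windows whose confidence falls below
    `threshold` are left to the background class and are not reported."""
    spotted = []
    for start, window in sliding_windows(signal, width, step):
        features = window_features(window)
        label, dist = min(
            ((name, math.dist(features, proto)) for name, proto in prototypes.items()),
            key=lambda pair: pair[1],
        )
        confidence = math.exp(-dist)
        if confidence >= threshold:
            spotted.append((start, label, round(confidence, 3)))
    return spotted

# Hypothetical activity prototypes in (mean, std) feature space.
PROTOTYPES = {"stir": (5.0, 0.0), "wipe": (2.0, 1.0)}
signal = [0, 0, 0, 0, 5, 5, 5, 5, 1, 3, 1, 3, 4, 6, 4, 6]
print(spot_activities(signal, PROTOTYPES))
# [(4, 'stir', 1.0), (8, 'wipe', 1.0)]
```

The threshold trades recall against false positives from the background: the window starting at index 12 is closest to "stir" but only with confidence of about 0.37, so it is rejected at the default of 0.5 and reported at a threshold of 0.3.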

Combining Spotted Activities to Infer High-Level Activities

Given a set of activities spotted in a continuous data stream, we can combine them into composed, higher-level activities. In line with research in psychology and linguistics on the perception of activity, we apply hierarchical models to capture complex activities. Consequently, we borrow the term partonomy, which describes “part-of-whole” relationships between activities and composites of activities. We explicitly use the term activity events for the underlying activities, and the term composite activity for high-level activities composed of such events.
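A two-level partonomy can be pictured as a mapping from each composite activity to its activity events. The sketch below is an illustration under assumptions, not the model used in the thesis: the activity names are hypothetical, and each composite is scored simply as the mean best confidence of its parts within one time segment.

```python
# Hypothetical two-level partonomy: composite activity -> its activity events.
PARTONOMY = {
    "make tea": ("fill kettle", "boil water", "pour water"),
    "make coffee": ("fill kettle", "boil water", "grind beans"),
    "wash dishes": ("fill sink", "scrub", "rinse"),
}

def best_confidences(spotted):
    """Keep the highest confidence per event label from (label, confidence) pairs."""
    best = {}
    for label, confidence in spotted:
        best[label] = max(confidence, best.get(label, 0.0))
    return best

def composite_scores(spotted, partonomy):
    """Score each composite as the mean best confidence over its parts.

    Parts that were never spotted in the segment contribute 0."""
    best = best_confidences(spotted)
    return {
        composite: sum(best.get(part, 0.0) for part in parts) / len(parts)
        for composite, parts in partonomy.items()
    }

def infer_composite(spotted, partonomy):
    """Return the composite activity with the highest score."""
    scores = composite_scores(spotted, partonomy)
    return max(scores, key=scores.get)

# Spotted events from one segment of the data stream (hypothetical values).
segment = [("fill kettle", 0.9), ("boil water", 0.8), ("pour water", 0.7), ("scrub", 0.4)]
print(infer_composite(segment, PARTONOMY))  # make tea
```

Note that "make tea" and "make coffee" share two of their three events; such shared parts are what make a partonomy useful beyond a flat list of composite labels.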

Improving Spotted Activities with Composite Activity Models

This task will exploit the fact that the composite activity is known from the previous task in order to classify the underlying activity events more accurately. We plan to start this task in parallel with the first task and to use the high-level activity descriptions from the ground truth in the early phases. Once the recognition of composite activities matures, its output can be plugged in at a later stage. The result will be a probabilistic model that provides likelihoods of actions occurring given the high-level activity; it will be evaluated by comparing its performance with that of classifying activity events alone.
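One simple way to picture such a model, offered as an assumption rather than the thesis's actual formulation, is to estimate P(event | composite) from annotated (composite, event) pairs with Laplace smoothing, multiply it with the event classifier's confidences and renormalise. All names and numbers below are hypothetical; the example shows how knowing the composite can flip an ambiguous event decision.

```python
from collections import Counter

def fit_event_given_composite(annotations, alpha=1.0):
    """Estimate P(event | composite) from annotated (composite, event) pairs.

    Uses additive (Laplace) smoothing with strength `alpha` over the event
    vocabulary seen in the annotations."""
    vocabulary = sorted({event for _, event in annotations})
    counts = {}
    for composite, event in annotations:
        counts.setdefault(composite, Counter())[event] += 1
    model = {}
    for composite, c in counts.items():
        total = sum(c.values()) + alpha * len(vocabulary)
        model[composite] = {e: (c[e] + alpha) / total for e in vocabulary}
    return model

def rescore(event_scores, composite, model):
    """Multiply classifier confidences by P(event | composite) and renormalise."""
    prior = model[composite]
    joint = {e: s * prior.get(e, 0.0) for e, s in event_scores.items()}
    z = sum(joint.values())
    if z == 0:
        # No candidate event is known for this composite: keep the classifier output.
        return dict(event_scores)
    return {e: v / z for e, v in joint.items()}

# Hypothetical ground-truth annotations, as used in the early phase.
annotations = [
    ("make tea", "pour water"), ("make tea", "pour water"), ("make tea", "stir"),
    ("wash dishes", "scrub"), ("wash dishes", "rinse"),
]
model = fit_event_given_composite(annotations)
ambiguous = {"pour water": 0.45, "rinse": 0.55}  # the classifier alone prefers "rinse"
posterior = rescore(ambiguous, "make tea", model)
print(max(posterior, key=posterior.get))  # pour water
```

The evaluation described above would then compare event accuracy with and without `rescore` on held-out data.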

Transferring Knowledge [2]

Given a partonomy as described above, and building on our observation that different composite activities share underlying activities, we can transfer knowledge about these underlying activities to recognize new and unseen composite activities.
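As a rough illustration of why shared parts enable transfer, and not as the method of [2], the sketch below assumes that event spotters already exist for a hypothetical vocabulary learned from seen composites. An unseen composite described only by its parts can then be checked for coverage and scored without collecting new composite-level training data.

```python
# Hypothetical event vocabulary: spotters for these events were trained on
# composite activities seen during training.
TRAINED_EVENTS = {"fill kettle", "boil water", "pour water", "grind beans", "scrub", "rinse"}

def missing_parts(new_parts, trained_events):
    """List the parts of a new composite that have no trained spotter yet."""
    return [part for part in new_parts if part not in trained_events]

def score_new_composite(new_parts, spotted):
    """Mean best confidence over the new composite's parts (0 for unspotted parts)."""
    best = {}
    for label, confidence in spotted:
        best[label] = max(confidence, best.get(label, 0.0))
    return sum(best.get(part, 0.0) for part in new_parts) / len(new_parts)

# An unseen composite whose parts come from two different seen composites.
clean_teapot = ("boil water", "pour water", "rinse")
print(missing_parts(clean_teapot, TRAINED_EVENTS))  # []
print(missing_parts(("knead dough", "bake"), TRAINED_EVENTS))  # ['knead dough', 'bake']

segment = [("boil water", 1.0), ("pour water", 0.5), ("rinse", 0.75)]
print(score_new_composite(clean_teapot, segment))  # 0.75
```

Composites whose parts are all covered can reuse the existing spotters directly; only parts reported by `missing_parts` would still need training data.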

References

[1] All for one or one for all? – Combining Heterogeneous Features for Activity Spotting, U. Blanke, B. Schiele, M. Kreil, P. Lukowicz, B. Sick and T. Gruber, 7th IEEE PerCom Workshop on Context Modeling and Reasoning (CoMoRea), April 2010.

[2] Remember and Transfer what you have Learned - Recognizing Composite Activities based on Activity Spotting, U. Blanke and B. Schiele, IEEE International Symposium on Wearable Computers (ISWC), October 2010.