Monocular 3D Pose Estimation and Tracking by Detection

M. Andriluka, S. Roth, B. Schiele. Monocular 3D Pose Estimation and Tracking by Detection.

IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2010), San Francisco, USA, June 2010.

Paper | Slides | Videos | Data | Contact Us

Abstract:

Automatic recovery of 3D human pose from monocular image sequences is a challenging and important research topic with numerous applications. Although current methods are able to recover 3D pose for a single person in controlled environments, they are severely challenged by realworld scenarios, such as crowded street scenes. To address this problem, we propose a three-stage process building on a number of recent advances. The first stage obtains an initial estimate of the 2D articulation and viewpoint of the person from single frames. The second stage allows early data association across frames based on tracking-by-detection. These two stages successfully accumulate the available 2D image evidence into robust estimates of 2D limb positions over short image sequences (= tracklets). The third and final stage uses those tracklet-based estimates as robust image observations to reliably recover 3D pose. We demonstrate state-of-the-art performance on the HumanEva II benchmark, and also show the applicability of our approach to articulated 3D tracking in realistic street conditions.

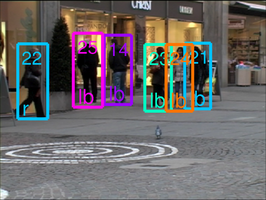

The videos show detection and 2D tracking of people (left) and 3D human pose estimation (right) on the "TUD Stadtmitte" sequence. The videos are created with the msmpeg4v2 codec (from ffmpeg).

Datasets:

The dataset "TUD Multiview Pedestrians" was used in the paper to evaluate single-frame people detection and viewpoint estimation. It contains training, validation and test images in which people are annotated with bounding boxes and one of the eight viewpoints. The "TUD Stadmitte" sequence was used to demonstrate 3D pose estimation performance of our method in real-world street conditions.

New: Thanks to Anton Andriyenko from the GRIS group at TU Darmstadt the annotations of the "TUD Stadtmitte" sequence have been extended to include unique ID's of all people and annotations for the fully occluded people. The GRIS group has also computed the camera calibration. The data is available here.

Contact:

For further information please contact the authors: Micha Andriluka, Stefan Roth and Bernt Schiele.