Eye-Based Human-Computer Interaction

Andreas Bulling

Our eyes are a compelling input modality for interacting with computing systems. In contrast to other, more established modalities such as touch or speech, our eyes are involved in nearly everything that we do, anywhere and at any point in time, even while we are sleeping. They also naturally indicate what we are interested in and attending to, and their movements are driven by the situation as well as by our goals, intentions, activities, and personality. Finally, eye movements are also influenced by a range of mental illnesses and medical conditions, such as Alzheimer's disease, autism, or dementia. Taken together, these characteristics underline the significant information content available in our eyes and the considerable potential of using them for implicit human-computer interaction. For example, a wearable assistant could monitor the visual behaviour of an elderly person over a long period of time and provide the means to detect the onset of dementia, thereby increasing the chances of earlier and more appropriate treatment. In addition, our eyes are the fastest-moving external part of the human body and can be repositioned effortlessly and with high precision. This makes the eyes similarly promising for natural, fast, and spontaneous explicit human-computer interaction, i.e., interactions in which users consciously issue commands to a computer using their eyes.

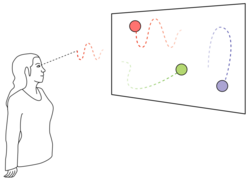

Our method using smooth pursuit matches the user's eye movement with the movement of on-screen objects.

Despite considerable advances over the last decade in tracking and analyzing eye movements, previous work on eye-based human-computer interaction has mainly studied the use of the eyes in controlled laboratory settings that involved a single user, a single device, and WIMP-style (windows, icons, menus, pointer) interactions. Previous work has also not explored the full range of information available in users' everyday visual behaviour, as described above.

Together with our collaborators, we strive to use the information contained in visual behaviour in all explicit and implicit interactions that users perform with computing systems throughout the day. We envision a future in which our eyes universally enable, enhance, and support such interactions and in which eye-based interfaces fully exploit the wealth of information contained in visual behaviour. In that future, the eyes will no longer be a niche input modality for special-interest groups; instead, the analysis of visual behaviour and attention will move centre stage in all interactions between humans and computers.

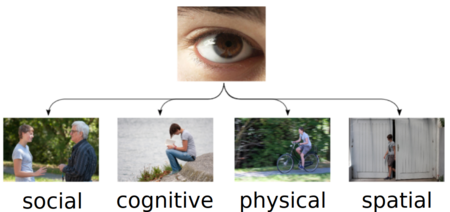

Our method infers contextual cues about different aspects of what we do, by analyzing eye movement patterns over time (from left to right): social (interacting with somebody vs. no interaction), cognitive (concentrated work vs. leisure), physical (physically active vs. not active), and spatial (inside vs. outside a building).

We work towards this vision by

1) developing sensing systems that robustly and accurately capture visual behaviour in ever-changing conditions,

2) developing computational methods for automatic analysis and modeling that are able to cope with the large variability and person-specific characteristics in human visual behaviour, and

3) using the information extracted from such behaviour to develop novel human-computer interfaces that are highly interactive, multimodal, and modeled after natural human-to-human interactions.

For example, we have developed new methods to automatically recognize high-level information about users from their visual behaviour, such as periods of time during which they were physically active, interacting with others, or doing concentrated work. In other work, we have introduced smooth pursuit eye movements (the movements that we perform when following moving objects, such as a car passing by in front of us) for human-computer interaction. We have demonstrated that smooth pursuit movements can provide a spontaneous, natural, and robust means of interacting with ambient displays using gaze.
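To make the idea of matching eye movement to moving on-screen objects more concrete, the following is a minimal sketch rather than the actual implementation. The text does not say how the match is computed; this sketch assumes a Pearson correlation between the gaze trajectory and each object's trajectory over a short time window, computed separately for the horizontal and vertical axes. The function names, the 0.8 threshold, and the simulated circular trajectories are all hypothetical choices made for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def match_pursuit_target(gaze, targets, threshold=0.8):
    """Return the name of the target whose motion best matches the gaze, or None.

    gaze:      list of (x, y) gaze samples within one time window
    targets:   dict mapping a target name to its (x, y) positions at the same times
    threshold: assumed minimum correlation required on both axes
    """
    gaze_x = [p[0] for p in gaze]
    gaze_y = [p[1] for p in gaze]
    best_name, best_score = None, threshold
    for name, path in targets.items():
        corr_x = pearson(gaze_x, [p[0] for p in path])
        corr_y = pearson(gaze_y, [p[1] for p in path])
        # Require agreement on both axes. Note that a target moving along
        # only one axis has zero variance on the other and never matches here.
        score = min(corr_x, corr_y)
        if score > best_score:
            best_name, best_score = name, score
    return best_name


def circle(n, direction, radius=100.0, center=(400.0, 300.0)):
    """Hypothetical target path: n samples of one revolution around center."""
    return [
        (center[0] + radius * math.cos(direction * 2 * math.pi * i / n),
         center[1] + radius * math.sin(direction * 2 * math.pi * i / n))
        for i in range(n)
    ]


# Two targets circling in opposite directions.
targets = {"target_a": circle(60, -1), "target_b": circle(60, +1)}

# Simulated gaze that follows target_b but is shifted by a constant offset,
# as it might be with an uncalibrated tracker.
gaze = [(x + 50.0, y - 30.0) for x, y in targets["target_b"]]
selected = match_pursuit_target(gaze, targets)
```

Because correlation compares the shape of the movement rather than absolute positions, the constant offset in the simulated gaze does not affect the result in this sketch. A stationary gaze has no variance and therefore matches no target.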

Andreas Bulling

DEPT. 2 Computer Vision and Multimodal Computing

Phone +49 681 9325-2128

Email bulling@mpi-inf.mpg.de